Over the past few months, my use of LLMs (large language models) has increased significantly. I've become more curious, I learn a lot more each day, and I'm excited by the overall productivity boost. Any topic I needed to learn became easier: I simply went to ChatGPT, pasted the content, and asked it to teach me. I even created a specialized "Teacher GPT" whose purpose was to teach me content in the best way possible, tailored to my preferences. I showed it examples from Yashavant Kanetkar, the author of Let Us C, a book known for teaching programming fundamentals in C clearly, and asked the model to adopt his pedagogy. However, I noticed that the longer my prompts were, the slower the responses became, and honestly, about half the time it didn't teach the subject effectively. This suggested I needed a separate GPT "teacher" for each subject: a model that is great at teaching programming won't necessarily excel at teaching math.

However, this piece isn't about GPT or teaching methods; it's about learning itself. Many students put substantial effort into learning something, only to forget it later. This usually happens because, despite working hard, they don't understand how their brain learns. That understanding is worth building, even though the brain is one of the most complex organs in our body and one we still haven't fully decoded.

Here, I'll share some techniques and insights I've gained about effective learning. These ideas are based purely on my personal experiences and understanding. Please don't treat them as absolute truths because everyone learns differently. You might already be familiar with common techniques like the Feynman technique, but we'll skip those here.

Reading the right way

Friedrich Nietzsche describes deep understanding beautifully:

"For philology is that venerable art which demands of its votaries one thing above all: to go aside, to take time, to become still, to become slow - it is a goldsmith's art and connoisseurship of the word which has nothing but delicate, cautious work to do and achieves nothing if it does not achieve it lento. But for precisely this reason it is more necessary than ever today, by precisely this means does it entice and enchant us the most, in the midst of an age of 'work,' that is to say, of hurry, of indecent and perspiring haste, which wants to 'get everything done' at once, including every old or new book: this art does not so easily get anything done; it teaches to read well, that is to say, to read slowly, deeply, looking cautiously before and aft, with reservations, with doors left open, with delicate eyes and fingers… My patient friends, this book desires for itself only perfect readers and philologists: Learn to read me well!" ― Friedrich Nietzsche, Daybreak: Thoughts on the Prejudices of Morality, pg. 5.

He compares careful reading to the delicate art of a goldsmith, emphasizing slow and meticulous work. For him, reading quickly, skimming, or just hunting for summaries betrays the essence of genuine learning. So when we read non-fiction, we should approach it slowly: read a paragraph, pause to think about it for a minute or so, then move on. Once you finish a chapter, reflect again. This deliberate process gives ideas time to settle and strengthens the neural connections in our brains. Speaking of neural connections, let's briefly discuss artificial neural networks.

The connections

If you're unfamiliar, artificial neural networks (or neural nets) are a powerful family of AI models, especially well suited to unstructured data like images, text, and video. A key principle behind them: a network learns by repeatedly adjusting the strengths (weights) of its connections, so the patterns it is trained on most often end up most strongly encoded and have the most influence on its output.

Interestingly, the human brain behaves similarly. Scientists once believed the brain's structure was fixed after childhood, like an operating system burned permanently into ROM. However, modern studies show our brains behave more like rewritable RAM: even as adults, our brains adapt by forming new neural connections, reinforcing frequently used pathways, and pruning away unused ones. This ability to change structurally and functionally in response to learning or experience is known as neuroplasticity, often described as the brain "rewiring" itself.

So, doesn't this imply that the more we grapple with a topic, play around with it, ask relevant questions, and solve related problems, the stronger and more confident our grasp becomes? Merely watching a YouTube video might feel insightful, but when we try to explain the same topic to someone else and fail to answer their questions, it shows we haven't fully learned it.
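To make this "use it or lose it" idea concrete, here is a toy model in Python. It is a hypothetical sketch, not how the brain or modern neural nets actually learn (real networks are trained with gradient descent). Each connection has a strength between 0 and 1: a session that uses a connection nudges it toward 1 (a Hebbian-style update), sessions that skip it let it decay, and anything that falls below a threshold is pruned. The names `train`, `lr`, `decay`, and `prune_below`, and all the numbers, are made up for illustration.

```python
# Toy "use it or lose it" model: Hebbian-style strengthening plus decay and pruning.
# Hypothetical illustration only; not how the brain or real neural nets are trained.

def train(weights, sessions, lr=0.1, decay=0.05, prune_below=0.1):
    """Strengthen connections that get used and let unused ones fade.

    weights:  dict mapping a connection name to its strength (0..1)
    sessions: list of sets; each set names the connections used in one session
    """
    for used in sessions:
        for name in weights:
            if name in used:
                # Practice strengthens the connection; growth slows as it nears 1.0
                weights[name] += lr * (1.0 - weights[name])
            else:
                # Unpracticed connections slowly weaken
                weights[name] *= 1.0 - decay
        # "Pruning": connections that fall below the threshold are removed entirely
        for name in [n for n, w in weights.items() if w < prune_below]:
            del weights[name]
    return weights


weights = {"recursion": 0.2, "pointers": 0.2, "calculus": 0.2}
# 10 sessions practicing recursion and pointers, then 10 practicing only recursion
sessions = [{"recursion", "pointers"}] * 10 + [{"recursion"}] * 10
result = train(weights, sessions)
print(result)
```

Here "recursion", practiced in every session, ends up strongest; "pointers" fades once practice stops; and "calculus", never practiced, drops below the threshold and is pruned.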

Optimum level

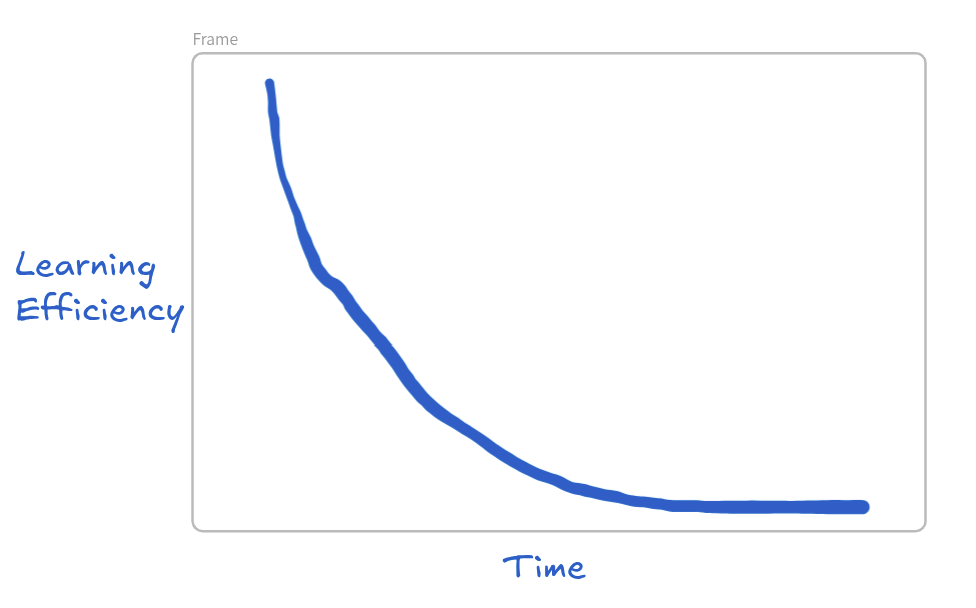

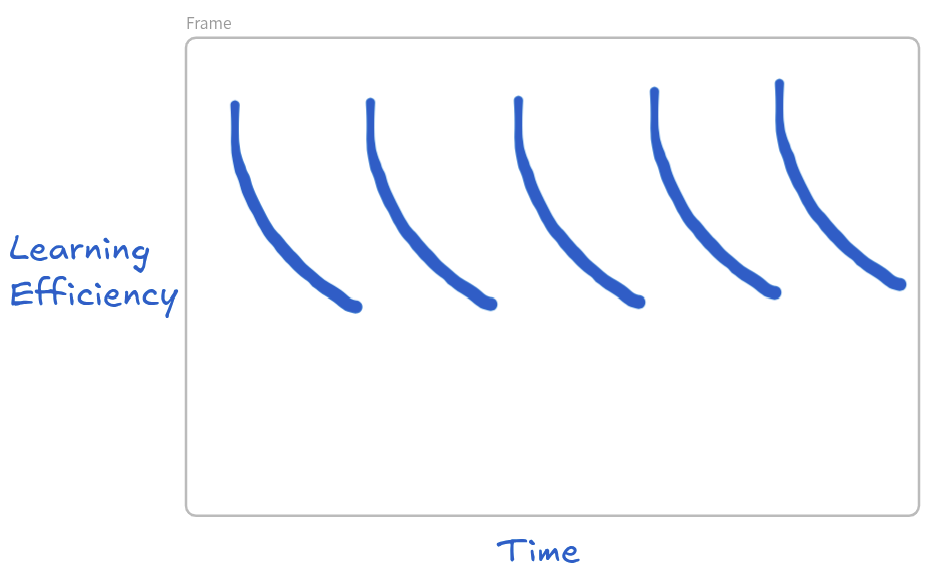

The "optimum level" is the stretch of time during which our brain works at peak efficiency. Its length varies from person to person; for an average person it's typically somewhere between 30 and 90 minutes. Early in a session we grasp concepts easily, but once we pass that span, everything we read starts to feel confusing or unclear, and we begin to forget.

You can use this to boost your learning by breaking your study time into chunks separated by 5–10 minute breaks (just not breaks spent scrolling reels, because those usually stretch into half an hour). Specifically, I'd recommend taking a break when you feel you're about halfway through your optimum level; that way, you get back to your peak more quickly and spend more of your time learning at your best.

(Btw, I learned the trick above from a YouTube video. If anyone knows the source, please comment and I'll add credit.)
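The chunking trick is simple arithmetic, so here is a minimal Python sketch of it. It assumes study chunks of half your optimum level with a fixed break between them; the function name `plan_sessions` and its defaults (a 60-minute optimum level, 5-minute breaks) are hypothetical, so plug in your own numbers.

```python
def plan_sessions(total_minutes, optimum_minutes=60, break_minutes=5):
    """Split `total_minutes` of study into focus chunks of half the optimum level,
    with a short break between consecutive chunks.

    Returns a list of (kind, start_minute, end_minute) tuples.
    """
    chunk = max(1, optimum_minutes // 2)  # break at about half the optimum level
    plan = []
    clock = 0     # wall-clock minutes since the session started
    studied = 0   # minutes of actual study so far
    while studied < total_minutes:
        length = min(chunk, total_minutes - studied)  # the last chunk may be shorter
        plan.append(("study", clock, clock + length))
        clock += length
        studied += length
        if studied < total_minutes:  # no break needed after the final chunk
            plan.append(("break", clock, clock + break_minutes))
            clock += break_minutes
    return plan


plan = plan_sessions(120, optimum_minutes=60, break_minutes=5)
for kind, start, end in plan:
    print(f"{start:3d}-{end:3d} min: {kind}")
```

With a 60-minute optimum level, two hours of study becomes four 30-minute chunks separated by three 5-minute breaks, 135 minutes in total.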

Native language

Learning in my native language has also benefited me immensely. Here's the catch: you might read or listen in English, but you think in your native language, which creates a gap between the content and your internal understanding. Learning effectively in your native language used to be tough before GPT. As a non-native speaker, I'd often reread complex sentences several times, adding unnecessary cognitive load, and I'd end up looking for simpler explanations or ones in my native language (like Hindi or Urdu). GPT changed this by effortlessly translating complex English explanations into Roman Urdu. I don't advocate learning exclusively in your native language, because most nuanced technical vocabulary exists primarily in English. But maintaining a bilingual mental dictionary helps bridge the gap, boosting understanding and enabling intuitive "aha" moments.

The right question

Most people with access to digital devices now lean towards tools like ChatGPT or Gemini instead of lengthy slides or textbooks. Yet simply commanding them to "teach" or "explain" isn't enough. LLMs are essentially sophisticated next-word predictors, so they need precise prompts to understand exactly what's expected. Asking vague questions, or misunderstanding the problem you're asking about, is essentially fooling yourself. As Richard Feynman famously advised:

"The first principle is that you must not fool yourself - and you are the easiest person to fool."

By the way, here are the custom instructions I use with GPT. They consistently give me precise, creative, reliable, and intuitive responses. I wrote them as a CS student, so if you want to use them, tailor them to your own domain:

- I love learning, explanations, education, and insights. When you have an opportunity to be educational or to provide an interesting insight or connection, take it.

- If I ask you to explain something, teach it like the best teacher in the world (e.g., Richard Feynman) would, with examples, ensuring I fully understand it, not just you. Provide a brief backstory for context and define any difficult words along the way.

- Be based - i.e., be straightforward with me and just get to the point.

- I am allergic to language that sounds too formal and corporate, like something you'd hear from an HR business partner. Avoid this type of language.

- Be innovative and think outside the box.

- Readily share strong opinions - don't hold back.

- When explaining topics or creating artifacts, use code and visualizations: favor Python (with JavaScript as a fallback) for explanations and canvas for previewable artifacts.

- I like to understand what happens under the hood, so explain the inner workings of complex concepts with examples.

- Prioritize examples and dry runs over theory.

But tools are just that, tools. At the heart of it, learning isn't about having perfect answers. It's about asking better questions, slowly, repeatedly, in a language your brain truly understands.